Now that the move from WordPress to Pelican is complete, I'm starting to automate some of my site maintenance tasks. The first one I wanted to take on was backing up the site. My previous WP site was pretty automated, so I wanted to have the same "fire and forget" process here too.

There are three primary items I wanted to make sure were getting backed up right away:

- The original markdown files used to generate the site

- The current Pelican configuration files

- The current website theme, which includes the CSS, template, and .js files

I looked at a variety of options, but the one I'm going with for now is backing up to Dropbox.

Install Dropbox

My current setup is a Linode box running Linux, so to back up my site to Dropbox I first needed to install Dropbox on that box. There are several guides on how to set up Dropbox. The most informative one I read was on dropboxwiki.com: Install Dropbox In An Entirely Text-Based Linux Environment. It gave great background on getting Dropbox up and running on a Linux box.

One step I took that wasn't in the dropboxwiki.com article was using Dropbox's native command-line script to run the Dropbox process. Dropbox's documentation is apparently not up to date with the script. The current version lets you set up the daemon process by running dropbox.py start -i (the -i flag installs the daemon if it is missing). This walks through the install and gets the dropboxd process running in the background.
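Since the backup depends on the daemon actually running, it can help to check on it before each run. The following is a minimal sketch, not part of my original setup. The function name ensure_dropbox_running and the default script location are made up for illustration, and it assumes dropbox.py status prints "Dropbox isn't running!" when the daemon is down:

```sh
#!/bin/sh
# Start the Dropbox daemon if the CLI reports that it is not running.
# Usage: ensure_dropbox_running [PATH_TO_DROPBOX_PY]
# The default path below is a hypothetical location for the CLI script.
ensure_dropbox_running() {
    cli=${1:-$HOME/bin/dropbox.py}

    # Ask the CLI for the daemon status; give up if the CLI cannot be run.
    status=$("$cli" status 2>/dev/null) || return 1

    case $status in
        # Assumed status text for a stopped daemon.
        "Dropbox isn't running!"*)
            "$cli" start
            ;;
    esac
}
```

Calling ensure_dropbox_running with no arguments uses the default path. If the daemon is already up, the function does nothing.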

Side note: I created a new Dropbox account just for backing up the site. I know there is no way I'll be hitting more than 2GB of files anytime soon. Plus, it keeps my personal stuff segregated from the website.

Setup shell script

The next step was to get the files copied from various spots on the server into the Dropbox folder. This was pretty straightforward with a six-line shell script.

The last line of the script appends to a log file I use to monitor various activities and processes related to automating my site. As I find new folders that need to be backed up, I can just add them to the script and start copying them over to Dropbox.
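A minimal sketch of a script along these lines is below. It is an illustration rather than my exact script: the function name backup_site and all of the paths in the usage note are hypothetical placeholders.

```sh
#!/bin/sh
# Copy each source directory into the backup directory, then log the run.
# Usage: backup_site DEST_DIR LOG_FILE SRC_DIR...
backup_site() {
    dest=$1
    log=$2
    shift 2
    copied=0

    mkdir -p "$dest" || return 1

    for src in "$@"; do
        if [ -d "$src" ]; then
            # -R copies recursively, -p preserves timestamps and permissions.
            cp -Rp "$src" "$dest/" || return 1
            copied=$((copied + 1))
        else
            # Record a missing source instead of aborting the whole run.
            echo "$(date '+%Y-%m-%d %H:%M:%S') backup: missing $src" >> "$log"
        fi
    done

    # Append a timestamped summary line to the shared automation log.
    echo "$(date '+%Y-%m-%d %H:%M:%S') backup: copied $copied item(s) to $dest" >> "$log"
}
```

With placeholder paths, a call might look like backup_site "$HOME/Dropbox/site_backup" "$HOME/automation.log" "$HOME/site/content" "$HOME/site/theme". Adding another folder to the backup is then just one more argument.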

Add shell script to crontab

The last step was to add the shell script as a cron task so it would run once a day. I simply added the schedule (0 4 * * *, which means 4:00 AM every day), the user to run it as, and the script path to the existing system-wide crontab configuration.

# /etc/crontab: system-wide crontab

SHELL=/bin/sh

PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin

# m h dom mon dow user command

*/5 * * * * smithj099 /home/smithj099/site_regen_script.sh

0 4 * * * smithj099 /home/smithj099/pelican_backup.sh

17 * * * * root cd / && run-parts --report /etc/cron.hourly

25 6 * * * root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.daily$

47 6 * * 7 root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.weekl$

52 6 1 * * root test -x /usr/sbin/anacron || ( cd / && run-parts --report /etc/cron.month$
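Scripts that work in an interactive shell sometimes fail under cron, because cron runs them with a much smaller environment. One way to check for that before waiting for the 4:00 AM run is to launch the script with only the SHELL and PATH values from the crontab above. The helper name run_like_cron is made up for this sketch, and it only approximates what cron actually sets:

```sh
#!/bin/sh
# Run a command string with a stripped-down, cron-like environment.
# Usage: run_like_cron 'COMMAND'
run_like_cron() {
    # env -i starts from an empty environment; only the variables
    # listed here are visible to the command.
    env -i \
        SHELL=/bin/sh \
        PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin \
        HOME="${HOME:-/}" \
        /bin/sh -c "$1"
}
```

For example, run_like_cron /home/smithj099/pelican_backup.sh runs the backup script the way the crontab entry would, minus the scheduling. If the script relies on an alias or a variable from my login shell, it will fail here too.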

Backing up the backup

With every backup plan, there has to be a backup of the backup. In this case I have two redundant backups.

- Linode backup service: This was originally going to be my primary backup method (and probably should be considered as such). For a whopping $2.50/mo, Linode will do an automated backup of my box with incremental versions. No-brainer. Yes. Easy. Done.

- Time Machine: Since I'm using Dropbox as one of the backup methods, I set up a second user account on my Mac Mini and linked that user account to the website's Dropbox account. Since the files are essentially saved locally on my Mac Mini, I can use Time Machine to back up the Dropbox folder and keep redundant backups of the website files.

So now I have a backup in each of these places:

- Dropbox

- My hosting service (Linode)

- My local Time Machine

Three different backups for a grand total of $2.50 per month.

System Impact

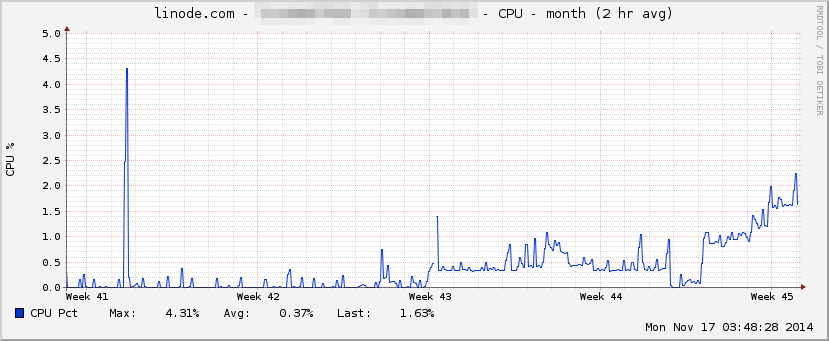

Since the full site switchover, I've made the following changes:

- Moving the primary domain over to Linode

- Adding the automatic site regeneration process

- Adding the automated backup process

- Running the Dropbox daemon

After all of that, I can say the performance of the server took a slight hit: CPU utilization went up almost 3x.

The best part

Now I can post to the site using Dropbox and some shell scripts. Look for a post on this soon.